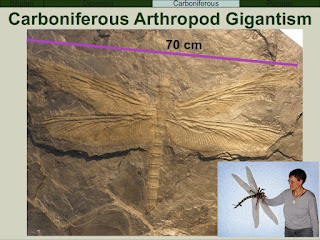

Today, the biggest insects are goliath beetles, atlas moths, and giant stick insects. But during the Carboniferous period (about 360 to 300 million years ago), there were millipedes 2 m (6.5 ft) long and giant dragonfly-like insects (order Protodonata) the size of eagles!

Today’s question is:

How did insects get so big, and if they were big once, why are they so much smaller now?

It wasn’t just the insects that grew large back then; the first large plants flourished in this same period. Some ferns grew to 20 m (65 ft) or more in height, and their trunks grew thicker. While not as big as today’s largest plants, the change was significant, as plants before this period did not exceed 3 to 5 ft in height. There was plentiful carbon dioxide in the atmosphere, and the climate was warm all year round. This allowed lots of photosynthesis and lots of growth.

Plants were making lignin in abundance during the Carboniferous period (from the Latin carbo, "coal," and ferre, "to bear"). Lignin is the tough polymer that stiffens plant cell walls, and it is what allows plants to grow tall. It is also what gives the Carboniferous period its name, as the lignin of plants is a major component of the coal that formed from their remains.

Horsetails, another Carboniferous plant group that is still around today, also grew much bigger. Most horsetails today stay under about 1 m (3 ft), but in the Carboniferous period they were often 10 to 15 m (33 to 49 ft) tall.

But it was the arthropods that got all the publicity. Scorpions almost a meter long deserve a lot of attention! And consider the yuck factor of a 7-inch cockroach scurrying around at your feet.

What allowed these animals to grow so large? Scientists think it was related to lignin. With lignin, plants could grow larger and support more photosynthetic tissue. The Carboniferous is when forests spread widely across the land.

With more and bigger plants, more carbon dioxide was converted into carbohydrate, and more oxygen was released as a by-product. The oxygen content of the air in the Carboniferous period reached levels of 35% or more (today it is about 21%).

More oxygen in the air meant that animals could take up more oxygen. They could carry out more oxidative phosphorylation and produce more cellular energy (ATP), especially with all that plant material around to eat for carbohydrates (or plant-eaters to hunt down and eat). This growth spurt applied especially to animals that do not rely on blood to carry oxygen to their cells. Insects, for instance.

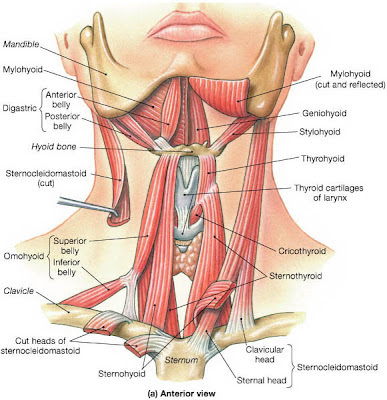

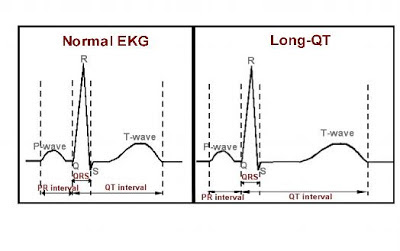

Insects use spiracles, small openings on the sides of their bodies, to take in air. Oxygen and other gases move through a system of smaller and smaller tubes (called tracheae) that brings oxygen to all the cells of the body. The carbon dioxide produced during cellular respiration is removed the same way.

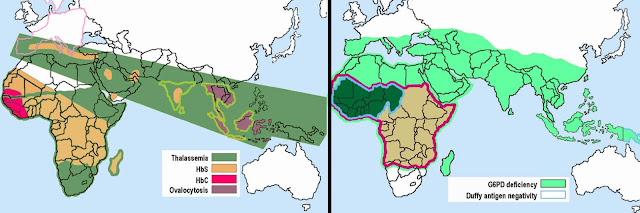

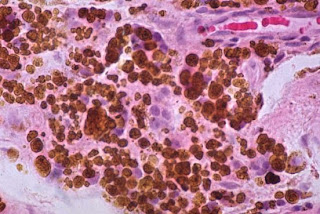

The spiracles of a flea are on the sides of its abdomen, and air travels through the tracheae to bring oxygen to every cell. It seems like he would drown if he took a dip in the hot tub.

This is not a particularly efficient way to move gases in and out of cells, because it relies largely on gases diffusing along the tubes. A slightly bigger bug needs a much more voluminous system of tracheae, and at some point the respiratory system would have to be bigger than the entire insect! There would be no room for all the other organs. But with a high concentration of oxygen, the spiracle/tracheae system is efficient enough, and insects can grow very large.
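To make the oxygen argument a little more concrete, here is a back-of-the-envelope Python sketch. It treats a single tracheal tube with Fick's law, under which the diffusive flux is proportional to the oxygen partial-pressure difference divided by the tube length. Everything in it is an illustrative assumption, not a result from the studies mentioned in this post: total air pressure equal to today's sea-level value (101.325 kPa), oxygen pressure near zero at the tissue end of the tube, the same diffusion coefficient and tube width in both atmospheres, and no active pumping of air (larger insects do ventilate their tracheae). The function names are hypothetical.

```python
# Back-of-the-envelope Fick's-law sketch of O2 diffusion along one tracheal tube.
# ASSUMPTIONS (illustrative only, not from the article or its sources):
#   - total air pressure equals today's sea-level value
#   - O2 partial pressure at the tissue end of the tube is ~0
#   - same diffusion coefficient and tube cross-section in both atmospheres
#   - no active ventilation: gas moves by diffusion only

SEA_LEVEL_KPA = 101.325  # assumed total atmospheric pressure (kPa)


def o2_partial_pressure(o2_fraction: float, total_kpa: float = SEA_LEVEL_KPA) -> float:
    """O2 partial pressure in kPa for a given mole fraction (Dalton's law)."""
    return o2_fraction * total_kpa


def relative_flux(o2_fraction: float, tube_length: float) -> float:
    """Diffusive flux per unit area, up to a constant: delta-P / length (Fick's law)."""
    return o2_partial_pressure(o2_fraction) / tube_length


def max_tube_length(o2_fraction: float, required_flux: float) -> float:
    """Longest tube (same arbitrary length units) that still delivers required_flux."""
    return o2_partial_pressure(o2_fraction) / required_flux


# Flux through a tube of length 1 (arbitrary units) in today's 21% O2 air
todays_flux = relative_flux(0.21, tube_length=1.0)

for label, fraction in [("today (21% O2)", 0.21), ("Carboniferous (35% O2)", 0.35)]:
    p = o2_partial_pressure(fraction)
    length = max_tube_length(fraction, todays_flux)
    print(f"{label}: pO2 = {p:.1f} kPa, max tube length = {length:.2f}")
```

Because flux scales with pressure divided by length in this model, the tube can grow in proportion to the oxygen pressure: at 35% oxygen it could be about 35/21, or roughly 1.67 times, as long while delivering today's flux. Real tracheal systems are far more complicated, so treat this only as an illustration of why extra oxygen loosens the size limit.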

High oxygen in the air also meant more dissolved oxygen in the water. Carboniferous fish and amphibians grew large as well. Some toothed fishes of this time were impressive predators more than 7 m (23 ft) long. Isopods in the oceans were also huge. Even today some of these crustaceans are pretty impressive: *Bathynomus giganteus* can grow to more than 16 inches (about 40 cm) in length.

So big plants brought high oxygen levels, which brought big animals. But why are arthropods so much smaller today than they were then? Oxygen levels are lower now, and according to a 2006 study, the relatively inefficient spiracle/tracheae system can no longer support a very large body. Insects had to get smaller.

But another hypothesis may also help explain the small size of many insects, especially flying insects. According to a 2012 study, the size of flying insects is related to another aspect of oxygen. When the explosion of plants in the Carboniferous period raised oxygen levels, the air became denser (an oxygen molecule is heavier than a nitrogen molecule). Insects were able to become better fliers, since their wings could push against more air.
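A quick check on the "oxygen is heavier" point: by the ideal gas law, air density at a fixed temperature and pressure is proportional to the average molar mass of the gas mixture. This Python sketch assumes a simplified atmosphere of only nitrogen and oxygen (ignoring argon, carbon dioxide and water vapor) and holds total pressure and temperature fixed (298.15 K is an arbitrary choice). It says nothing about whether the total pressure itself was different back then, and the function names are hypothetical.

```python
# Ideal-gas sketch: how much denser is air with 35% O2 than with 21% O2?
# ASSUMPTIONS (illustrative only): a two-gas N2 + O2 atmosphere, with the
# same total pressure and temperature in both cases.

R = 8.314462618    # molar gas constant, J/(mol*K)
M_O2 = 0.031998    # molar mass of O2, kg/mol
M_N2 = 0.028014    # molar mass of N2, kg/mol


def mean_molar_mass(o2_fraction: float) -> float:
    """Mole-fraction-weighted molar mass (kg/mol) of an N2/O2 mixture."""
    return o2_fraction * M_O2 + (1.0 - o2_fraction) * M_N2


def air_density(o2_fraction: float, pressure_pa: float = 101_325.0,
                temp_k: float = 298.15) -> float:
    """Ideal-gas density rho = P * M / (R * T), in kg/m^3."""
    return pressure_pa * mean_molar_mass(o2_fraction) / (R * temp_k)


today = air_density(0.21)
carboniferous = air_density(0.35)
increase_pct = (carboniferous / today - 1.0) * 100.0

print(f"density at 21% O2: {today:.3f} kg/m^3")
print(f"density at 35% O2: {carboniferous:.3f} kg/m^3")
print(f"increase: {increase_pct:.1f}%")
```

Under these assumptions the oxygen-rich air comes out roughly 2% denser. The percentage does not depend on the temperature or pressure chosen, because both cancel out of the ratio as long as they are the same in the two cases.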

Millions of years later, birds evolved. As they became better fliers (their flight also depended on moving air over their wings), they became better hunters, able to catch flying insects (and insects on the ground, for that matter). So being big became a disadvantage. Smaller insects now had a reproductive advantage: they were more likely to survive long enough to have offspring. Over time, insects as a whole grew smaller.

So today we have fairly small arthropods and insects, although my wife has personally never seen a small insect. According to her they are all large enough to carry off small children and have evil looks in their eyes.

Matthew E. Clapham and Jered A. Karr (2012). Environmental and biotic controls on the evolutionary history of insect body size. Proc. Natl. Acad. Sci. USA. DOI: 10.1073/pnas.1204026109

Next week – ideas for long studies on the nature of science.