Biology concepts – elements, biomolecules, biochemistry, trace elements, selenocysteine, stop codon

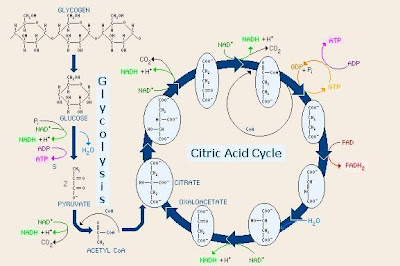

Biochemistry refers to how information flows through organisms via biochemical signaling and how chemical energy flows through cells via metabolism. All life on Earth uses basically the same biochemistry since we all came from a common ancestor – to the best of our knowledge.

A blue whale is the largest animal on the face of the Earth, ever. You could swing a tennis racquet while standing inside a chamber of its heart (left). Built very differently, the watermeal plant is the size of a grain of salt. Comparing the two organisms at the macro level is like comparing lug nuts and Twinkies, or pink and Darth Vader. But looks are often deceiving.

At the genetic level, about 50% of the genes in whales and watermeal are shared, coding for the same structural proteins or enzymes. At the biochemical level there's even more similarity: even where the gene products differ, most of the processes that huge whales and tiny flowering plants carry out are the same.

They are so similar for one overarching reason, and that reason points to an amazing commonality. Both the world’s largest animal and the world’s smallest flowering plant come from a common ancestor. It may have been many moons since their family had that argument at the summer picnic that drove them apart forever, but they are still related nonetheless.

And since they have a common ancestor, they are going to harbor many of the same traits as that ancestor – including the ways they carry out the reactions and functions in their cells. The totality of the molecules that are present in an organism and how they interact to perform different jobs is termed an organism’s biochemistry.

Organisms on Earth have similar biochemistry in part because they use the same types of macromolecules. Life as we know it is based on the interactions (biochemistry) of lipids, carbohydrates, proteins and nucleic acids. Each of these macromolecules is amazing and has many exceptions, so we will deal with each in the next few posts.

Whales and watermeal, and all life for that matter, are organic (from the Greek for "pertaining to an organ") because their biochemistry is based on carbon, but many of our important molecules are not organic. What is the most abundant molecule in living things? Water. Is water organic? No.

What creates the electrochemical gradient that fires our neurons? Sodium, potassium, and chloride ions. Are they organic? No. So the next time someone makes a joke about being a carbon-based life form, you can say you are just partly organic, and then let them ponder whether you are some kind of cyborg.

So what do the macromolecules have in common that is related to the biochemistry of life? They are made up of the same chemical elements. In fact, almost all biomolecules are made up of just six or fewer different elements: carbon (C), hydrogen (H), oxygen (O), nitrogen (N), phosphorus (P), and sulfur (S).

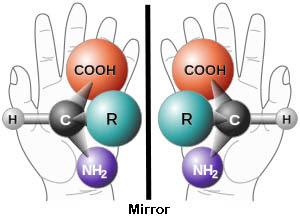

Carbon’s importance lies in its ability to bond to many different elements and to either accept or donate electrons in a bond. Carbon can form four bonds at the same time, to as many as four different atoms. This increases the possibility of complexity and is one reason our molecules are based on carbon. The situation is similar for oxygen, sulfur, nitrogen, and phosphorus.

Sulfur is one of the less abundant of these elements, since it is used mostly as a structural element in proteins, although it shows up in bone and other skeletal materials as well. Still, the average adult male (80 kg/175 lb) contains about 160 grams of sulfur, about a salt shaker’s worth.
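As a rough check on scale, using only the figures quoted above, 160 g of sulfur in an 80 kg body works out to a fifth of a percent of body mass:

$$\frac{160\ \text{g}}{80\,000\ \text{g}} = 0.002 = 0.2\%$$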

Only two of the twenty common amino acids that make up proteins contain sulfur (methionine and cysteine). But don't minimize its importance just because it is present in only two of the protein building blocks. The sulfurs in proteins often interact with one another; pairs of cysteines, for example, can link up through disulfide bonds, helping to determine the protein's three-dimensional structure. And for proteins, 3-D structure is everything: their function follows their form.
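To make the "only two of twenty" point concrete, here is a small Python sketch that stores the molecular formulas of the 20 standard amino acids (free, uncharged form), counts atoms with a simple regular expression, and picks out the ones containing sulfur. The names `AMINO_ACIDS`, `parse_formula`, `sulfur_containing`, and `elements_used` are hypothetical helpers for illustration, and the parser assumes flat formulas without parentheses. It also shows that all twenty building blocks use only C, H, O, N, and S.

```python
import re

# Molecular formulas of the 20 standard amino acids (free, uncharged form).
AMINO_ACIDS = {
    "alanine": "C3H7NO2",
    "arginine": "C6H14N4O2",
    "asparagine": "C4H8N2O3",
    "aspartic acid": "C4H7NO4",
    "cysteine": "C3H7NO2S",
    "glutamic acid": "C5H9NO4",
    "glutamine": "C5H10N2O3",
    "glycine": "C2H5NO2",
    "histidine": "C6H9N3O2",
    "isoleucine": "C6H13NO2",
    "leucine": "C6H13NO2",
    "lysine": "C6H14N2O2",
    "methionine": "C5H11NO2S",
    "phenylalanine": "C9H11NO2",
    "proline": "C5H9NO2",
    "serine": "C3H7NO3",
    "threonine": "C4H9NO3",
    "tryptophan": "C11H12N2O2",
    "tyrosine": "C9H11NO3",
    "valine": "C5H11NO2",
}


def parse_formula(formula: str) -> dict[str, int]:
    """Count atoms in a flat formula such as 'C3H7NO2S' (no parentheses)."""
    counts: dict[str, int] = {}
    for symbol, number in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[symbol] = counts.get(symbol, 0) + (int(number) if number else 1)
    return counts


def sulfur_containing(table: dict[str, str]) -> list[str]:
    """Names of the amino acids whose formula includes sulfur, sorted."""
    return sorted(name for name, f in table.items() if "S" in parse_formula(f))


def elements_used(table: dict[str, str]) -> set[str]:
    """Every element symbol that appears in any formula of the table."""
    used: set[str] = set()
    for f in table.values():
        used.update(parse_formula(f))  # iterating a dict yields its keys
    return used


if __name__ == "__main__":
    print(sulfur_containing(AMINO_ACIDS))      # ['cysteine', 'methionine']
    print(sorted(elements_used(AMINO_ACIDS)))  # ['C', 'H', 'N', 'O', 'S']
```

Swapping in selenocysteine's formula (C3H7NO2Se) would make the same parser report selenium, which is the exception discussed further down.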

Sulfur is important in other ways as well. Some bacteria substitute sulfur (in the form of hydrogen sulfide) for water in the process of photosynthesis. Other bacteria and archaea use sulfur instead of oxygen as the electron acceptor in cellular metabolism. This is one way organisms can be anaerobic (live without oxygen).

In a more bizarre example, sea squirts use sulfuric acid (H2SO4) in their stomachs instead of hydrochloric acid; just how they don’t digest themselves is a mystery. Just about every element has some off-label uses; we could find weird uses for C, H, O, N, and P as well. Heck, nitric oxide (NO) works in systems as diverse as immune function and vasodilation (think Viagra).

So these are the “elements of life,” right? Well, yes and no: you can’t survive without them, but you also can’t survive with only them. At least 24 different elements are required by some form of life. Two dozen exceptions to the elements-of-life rule sounds like an area ripe for amazing stories.

Some of these exceptions are called trace elements, needed in only small quantities in various organisms. It may be difficult to define “trace,” since some elements are needed in only small quantities in some organisms but in great quantities (or not at all) in others. Take copper (Cu), for instance. Humans use it for some enzymatic reactions and need little, but many mollusks carry oxygen in their blood with hemocyanin, a copper-containing protein (much as we use iron in hemoglobin).

Let’s start with a list of the exceptions; a list will let you do some investigating on your own to see how they are used in biological systems.

Aluminum (Al) 0.0735 g

Arsenic (As) 0.00408 g

Boron (B) 0.0572 g

Bromine (Br) 0.237 g

Cadmium (Cd) 0.0572 g

Calcium (Ca) 1142.4 g

Chlorine (Cl) 98.06 g

Chromium (Cr) 0.00245 g

Cobalt (Co) 0.00163 g

Copper (Cu) 0.0817 g

Fluorine (F) 3.023 g

Gold (Au) 0.00817 g

Iodine (I) 0.0163 g

Iron (Fe) 4.9 g

Magnesium (Mg) 22.06 g

Manganese (Mn) 0.0163 g

Molybdenum (Mo) 0.00812 g

Nickel (Ni) 0.00817 g

Potassium (K) 163.44 g

Selenium (Se) 0.00408 g

Silicon (Si) 21.24 g

Sodium (Na) 114.4 g

Tin (Sn) 0.0163 g

Tungsten (W) no level given for humans

Vanadium (V) 0.00245 g

Zinc (Zn) 2.696 g

You can see that for each element I gave a mass in grams. This corresponds to the amount that can be found in an 80 kg (175 lb) human male. But don’t confuse the mass found with the mass needed.
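The list is easier to compare as fractions of body mass. This Python sketch converts each mass above to parts per million (ppm) of an 80 kg body and splits the elements at 100 ppm, i.e. 0.01% of body mass. That cutoff is an assumption (one common textbook convention for "trace"), and as noted above, the line is fuzzy and varies between organisms. The names `ELEMENT_MASS_G`, `to_ppm`, and `split_by_abundance` are hypothetical, and tungsten is left out because no human value was given.

```python
BODY_MASS_G = 80_000  # 80 kg adult male, as in the list above

# Grams of each element in that body, copied from the list (tungsten omitted).
ELEMENT_MASS_G = {
    "Al": 0.0735, "As": 0.00408, "B": 0.0572, "Br": 0.237, "Cd": 0.0572,
    "Ca": 1142.4, "Cl": 98.06, "Cr": 0.00245, "Co": 0.00163, "Cu": 0.0817,
    "F": 3.023, "Au": 0.00817, "I": 0.0163, "Fe": 4.9, "Mg": 22.06,
    "Mn": 0.0163, "Mo": 0.00812, "Ni": 0.00817, "K": 163.44, "Se": 0.00408,
    "Si": 21.24, "Na": 114.4, "Sn": 0.0163, "V": 0.00245, "Zn": 2.696,
}

# Assumption: call an element "trace" below 100 ppm (0.01% of body mass).
TRACE_CUTOFF_PPM = 100.0


def to_ppm(grams: float) -> float:
    """Convert grams in the body to parts per million of body mass."""
    return grams / BODY_MASS_G * 1_000_000


def split_by_abundance(masses: dict[str, float],
                       cutoff_ppm: float = TRACE_CUTOFF_PPM) -> tuple[list[str], list[str]]:
    """Return (above_cutoff, trace) symbol lists, each ordered by descending mass."""
    above: list[str] = []
    trace: list[str] = []
    for symbol, grams in sorted(masses.items(), key=lambda kv: kv[1], reverse=True):
        (trace if to_ppm(grams) < cutoff_ppm else above).append(symbol)
    return above, trace


if __name__ == "__main__":
    above, trace = split_by_abundance(ELEMENT_MASS_G)
    print("above cutoff:", above)
    print("trace:", trace)
    for symbol in above + trace:
        grams = ELEMENT_MASS_G[symbol]
        print(f"{symbol:>2}  {grams:>9.5g} g  {to_ppm(grams):>10.3f} ppm")
```

With these numbers, calcium, potassium, sodium, chlorine, magnesium, and silicon land above the cutoff, while selenium comes out at about 0.05 ppm (roughly 51 parts per billion).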

Barium (Ba) isn’t used in any known biologic system, yet you have some in your body. It is the 14th most abundant element in the Earth’s crust, so it can enter the food chain via herbivores or decomposers and then find its way up to us. You probably have a couple hundredths of a gram in you right now.

Bromine (Br) is a crucial element for algae and other marine creatures, but as far as we know, mammals don’t need any. This brings up an interesting point about chemistry. Chlorine is integral to human life: just about anything that requires an electrochemical gradient will use chloride, to say nothing of stomach acid (HCl).

However, chlorine gas is a chemical weapon that will burn out your lungs (and did in WWI). Bromine gas is very similar to chlorine gas, so elements that are useful as dissolved ions can be lethal as gases.

How about something supposedly inert, like gold (Au)? We use it for jewelry because it is rare and supposedly doesn’t cause allergies (wrong; see this previous post). But some bacteria have an enzyme with gold in its active center. Gold is rare, so why would it be used for crucial biology? Most elements in biology are more common.

Finally, we should describe a couple of the uses of non-standard elements:

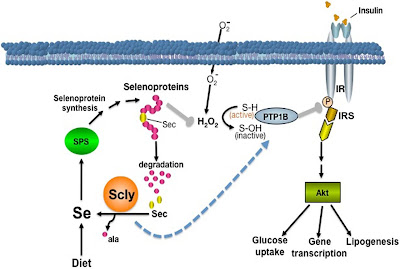

Selenium is a rare element, only the 60th most common element in the Earth’s crust. Yet without 0.00408 grams of selenium on board, a human is only so much worm food. Selenium is not essential for every organism, but it performs a unique role in those that need it, mammals included.

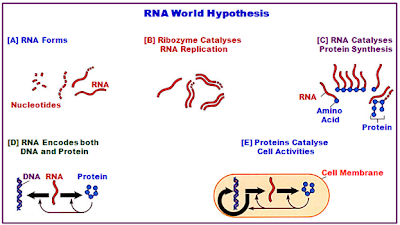

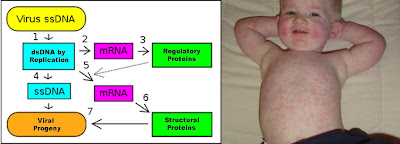

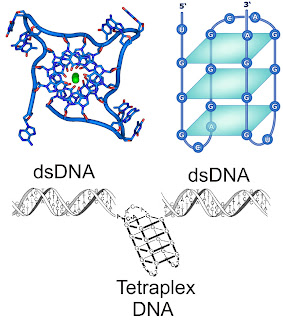

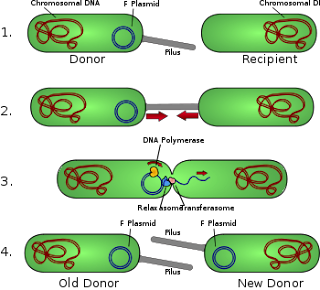

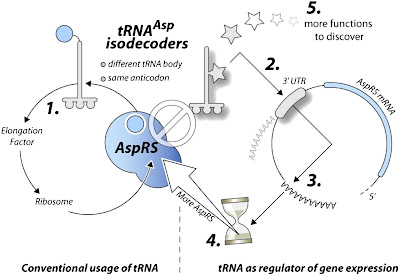

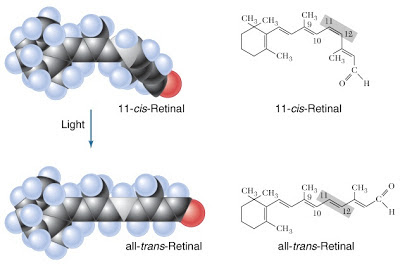

A few proteins, particularly glutathione peroxidase, contain selenocysteine: a cysteine amino acid with selenium in place of its sulfur. Selenocysteine is an amazing exception because the standard genetic code has no codon of its own for it! Instead, in certain messenger RNAs, UGA, normally a stop codon (a three-nucleotide run that calls for protein production to stop), is recoded to specify selenocysteine.
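A toy Python model of the recoding idea: the same UGA codon ends translation unless a recoding signal is present. In real cells that signal is a SECIS (selenocysteine insertion sequence) element in the mRNA working with dedicated translation machinery; here it is reduced to a hypothetical `has_secis` flag, and the codon table holds only the handful of codons the example needs.

```python
# Only the codons used in this demo; the real genetic code has 64 entries.
CODON_TABLE = {
    "AUG": "Met", "UGU": "Cys", "GGA": "Gly",
    "UAA": "STOP", "UAG": "STOP", "UGA": "STOP",
}


def translate(mrna: str, has_secis: bool = False) -> list[str]:
    """Translate an mRNA string codon by codon, starting at position 0.

    has_secis is a hypothetical simplification: when True, every UGA is
    read as selenocysteine (Sec) instead of terminating translation.
    """
    protein: list[str] = []
    for i in range(0, len(mrna) - 2, 3):
        codon = mrna[i:i + 3]
        if codon == "UGA" and has_secis:
            protein.append("Sec")  # stop codon recoded as selenocysteine
            continue
        residue = CODON_TABLE[codon]
        if residue == "STOP":
            break  # ordinary termination
        protein.append(residue)
    return protein


if __name__ == "__main__":
    mrna = "AUGUGUUGAGGAUAA"  # AUG UGU UGA GGA UAA
    print(translate(mrna))                  # ['Met', 'Cys']
    print(translate(mrna, has_secis=True))  # ['Met', 'Cys', 'Sec', 'Gly']
```

With the flag set, translation only stops at UAA, which this model never recodes.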

Selenocysteine changes the shape of the protein and forms the active site of enzymes such as the glutathione peroxidases. These enzymes are crucial for neutralizing reactive oxygen molecules inside cells, molecules that do damage by reacting with just about any other cellular biomolecule.

So selenocysteine is an endogenous biomolecule that is important for protecting our bodies, as important in its way as the antibiotics we borrow from other organisms. But a 2013 study showed that some antibiotics (doxycycline, chloramphenicol, and G418, also called geneticin) actually interfere with the production of selenocysteine proteins by causing errors in selenocysteine insertion at the UGA codon. In many cases, the amino acid arginine is inserted instead of selenocysteine, reducing the functionality of the enzymes. Yet another reason not to overprescribe antibiotics.

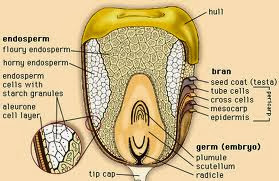

One last exception: silicon is important for many grasses. Remember, this is silicon, the element; not silicone, the polymer used in breast implants and caulk; and not silica, the mineral SiO2. Silicon is taken up by grasses of many types (crops, weeds, and water plants), although in grasses it may take the form of silica.

In some grasses, the inclusion of silicon makes them less likely to be victims of herbivory (being grazed on by herbivores). Herbivores avoid grasses high in silica because they aren’t digested well. A 2008 study showed that this reduced digestibility is related to silicon-mediated reduction in leaf breakdown through chewing and chemical digestion.

Another protective function of silicon in grasses was illustrated by a 2013 study. In halophytic (salt-loving) grasses that live on seashores, such as Spartina densiflora, increased silicon uptake resulted in increased uptake of mineral nutrients and increased transpiration, the crucial process for water movement through the plant.

In addition, these plants have better salt tolerance in the presence of increased silicon, even though they already have specific mechanisms for reducing the damage that such high salt concentrations could cause. Silicon reduced the amount of sodium that accumulated in the saltwater grasses. Pretty important for an element that is considered non-essential.

Next week, let’s start to look at the biomolecules made from C, H, O, N, P, and S. Proteins are macromolecules made up of amino acids, and amino acids are exceptional.

Tobe R, Naranjo-Suarez S, Everley RA, Carlson BA, Turanov AA, Tsuji PA, Yoo MH, Gygi SP, Gladyshev VN, & Hatfield DL (2013). High error rates in selenocysteine insertion in mammalian cells treated with the antibiotic doxycycline, chloramphenicol, or geneticin. The Journal of Biological Chemistry, 288(21), 14709-14715. PMID: 23589299

Mateos-Naranjo E, Andrades-Moreno L, & Davy AJ (2013). Silicon alleviates deleterious effects of high salinity on the halophytic grass Spartina densiflora. Plant Physiology and Biochemistry, 63, 115-121. PMID: 23257076

For more information or classroom activities, see:

Elements of life -

Trace elements in diet –

Trace elements in plants –

What is biochemistry –

Sulfur –

Bromine –

Selenium/selenocysteine –

Silicon based life –